If you have ever used a chatbot, you have probably also experienced the dreaded “Sorry, I don’t understand.” For the user this basically means: dead end, no points, go back to start and try again. After three attempts, most users give up, conclude that “chatbots are just dumb”, and are unlikely to talk to a chatbot again.

Avoiding the #1 Chatbot frustration: “Sorry, I don’t understand.”

As a conversational designer, you know that you should guide users and avoid dead ends. At the same time, you understand that training a chatbot on even a small domain takes time. Even worse: you only really find the edge cases after you go live. So how can you create a good experience in the meantime, at least good enough to go live?

If you are struggling with this question, this article is for you.

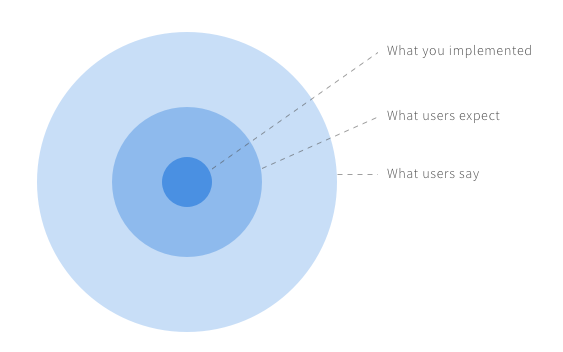

The scope gap

At the root of the problem is a gap between what the users of the chatbot expect it to be able to answer and what the team behind the chatbot expected users would ask.

Good advice when starting with chatbots is to keep it small: implement just a few use cases. The trouble is that users usually expect more. Especially when your chatbot is on the company website, users expect it to at least know about the things on the website.

Besides the topics users expect your chatbot to cover, users also say a lot of off-topic things that can confuse the chatbot and derail the conversation. A basic level of conversational skills and small talk can help deal with this.

Think about a user saying, “That’s great!” mid-conversation. The bot should be able to respond with “Good to hear.” and continue where it left off, instead of breaking with “Sorry, I don’t understand.” and leaving the user wondering what to do next.

A lot of chatbots get derailed by chit chat like “uhm…”

On a more technical note: training your chatbot on these off-topic utterances may also improve classification of the on-topic intents, as it explicitly shows the chatbot what NOT to include in your domain topics.

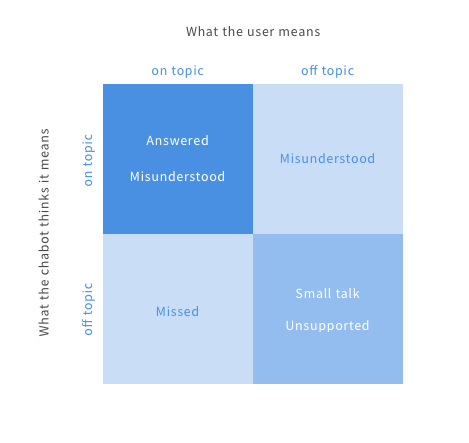

Confusion matrix

Besides the scope gap, there are many other reasons for confusion between users and chatbots.

Chatbots don’t actually understand what users say; instead, they try to match what the user says to the utterances they have seen during training. This is done using either handcrafted rules or machine learning. Both are prone to misclassification:

- Unsupported: the chatbot was not trained for this case and as such can’t reply.

- Missed: the chatbot was trained for the case but failed to classify it as such.

- Misunderstood: the chatbot incorrectly classified the intent, either as off-topic or as the wrong on-topic intent.

When you release your chatbot to the public, you have to monitor the conversation transcripts closely to detect the misunderstood, unsupported, and missed user intents.
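To make the three categories concrete, here is a minimal sketch of how reviewed transcript turns could be tallied. It assumes a human reviewer has labeled each turn with the intent the user actually meant; the intent names, the `SUPPORTED_INTENTS` set, and the choice to treat "no intent predicted" as missed and "different intent predicted" as misunderstood are illustrative assumptions, not part of any particular platform.

```python
from collections import Counter
from typing import Optional

# Hypothetical set of intents the chatbot was trained on.
SUPPORTED_INTENTS = {"opening_hours", "order_status", "leave_message"}


def categorize_turn(true_intent: str, predicted_intent: Optional[str]) -> str:
    """Compare the reviewer's label with the chatbot's prediction for one turn."""
    if true_intent not in SUPPORTED_INTENTS:
        return "unsupported"    # the bot was never trained for this case
    if predicted_intent is None:
        return "missed"         # trained for it, but nothing matched
    if predicted_intent != true_intent:
        return "misunderstood"  # matched, but to the wrong intent
    return "correct"


# (reviewer label, chatbot prediction) pairs from a made-up transcript review.
reviewed_turns = [
    ("order_status", "order_status"),
    ("order_status", None),
    ("opening_hours", "smalltalk_greeting"),
    ("refund_policy", None),
]

summary = Counter(categorize_turn(true, predicted) for true, predicted in reviewed_turns)
print(summary)
```

Tracking these counts over time gives a rough indication of whether to add new intents (unsupported) or more training phrases (missed and misunderstood).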

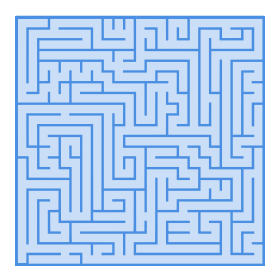

Dead-ends

Chatbots WILL make mistakes. How the chatbot deals with those situations makes the difference between a user who had a suboptimal experience that ended well and one who is left frustrated with no closure.

Chatbot frustrations arise from being stuck in a place the user can’t navigate out of.

When a user gets the message “Sorry, I don’t understand.” without any suggestions or means of navigating out of the situation, the conversation hits a dead end. Adding the hint “Try rephrasing your question” may tempt the user to try again, but without more specific guidance, this may only lead to more agitation.

Bot stubbornness

Another case is where the chatbot thinks it understands the user, immediately commits, and stubbornly chases the goal it set for the user, not allowing the user to change course.

If there is one thing that computers are good at, it is repeating themselves. When interacting with humans, this may not be the best trait. Most users give up after three attempts.

Chatbots are really good at repeating themselves; users are not

Now that we know why the interaction between chatbots and humans sometimes breaks down, how can we design a more perceptive and well-behaved chatbot?

Dealing with the scope gap

So, how do we deal with the gap between the user’s expectations and the chatbot’s understanding?

First and foremost: be as clear as possible about what cases the chatbot can support. Use the opening statement: “Hi, I am a chatbot here to help you do X, Y and Z” as well as the menu prompt: “What can I help you with? X, Y, or Z”.

You obviously cannot enumerate all the possible intents the chatbot can handle here. Make sure to capture at least the root intents like: “Ask a question” or “Leave a message”.

“Asking a question” is still a very large scope. In the next prompt you can list some of the most frequently asked questions as initial suggestions. Still, chances are that the question the user asks cannot be matched to a direct reply.

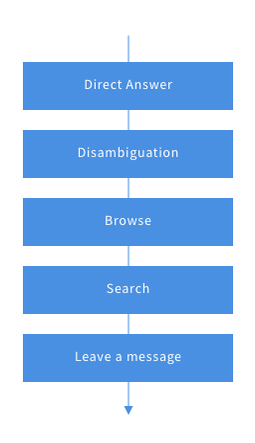

With our base bot we use a layered fallback approach to answering:

- First, try to match the user’s question to a direct answer: for instance, a Dialogflow intent that matches with a confidence above 0.6.

- If there is no matching intent, see if you can match a topic and offer steps to help the user clarify what they are looking for.

- Present relevant intents or knowledge articles (FAQs) to the user in a list. The user can potentially pick the right answer and, in doing so, help train the chatbot.

- In the background, search through unstructured information such as the website or documents that were provided. Present the top 3 results.

- Finally, if none of the above resolved the user’s query, present an option to leave a message with their initial question pre-filled.
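The layers above can be sketched as a simple chain that returns the first layer able to say something useful. This is a minimal illustration under stated assumptions, not the actual base bot implementation: the four lookup functions are passed in as hypothetical callables (in practice they might wrap an intent classifier, a topic matcher, an FAQ index, and a site search), and only the 0.6 threshold comes from the text. A real conversation would also move on to the next layer when the user rejects what was offered, which this sketch leaves out.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

CONFIDENCE_THRESHOLD = 0.6  # the threshold mentioned in the list above


@dataclass
class Reply:
    layer: str                                        # fallback layer that produced the reply
    text: str                                         # what the bot says
    options: List[str] = field(default_factory=list)  # items the user can pick from
    prefill: Optional[str] = None                     # pre-filled text for leave-a-message


def layered_fallback(
    question: str,
    match_intent: Callable[[str], Optional[Tuple[str, float]]],  # (answer, confidence)
    match_topic: Callable[[str], Optional[str]],                 # clarifying prompt
    find_articles: Callable[[str], List[str]],                   # FAQ titles
    search_documents: Callable[[str], List[str]],                # ranked search hits
) -> Reply:
    # 1. Direct answer, only when the intent match is confident enough.
    intent = match_intent(question)
    if intent is not None and intent[1] > CONFIDENCE_THRESHOLD:
        return Reply("direct_answer", intent[0])

    # 2. Topic match: help the user clarify what they are looking for.
    clarification = match_topic(question)
    if clarification:
        return Reply("topic", clarification)

    # 3. Related intents or knowledge articles, presented as a list.
    articles = find_articles(question)
    if articles:
        return Reply("articles", "Is one of these what you are looking for?", articles)

    # 4. Search unstructured content and present the top 3 results.
    results = search_documents(question)[:3]
    if results:
        return Reply("search", "I found these pages that might help:", results)

    # 5. Last resort: leave a message with the original question pre-filled.
    return Reply("leave_message", "Would you like to leave a message?", prefill=question)


# Example with stubbed lookups: the intent match is too weak, so search answers.
reply = layered_fallback(
    "Where is my parcel?",
    match_intent=lambda q: ("Your parcel is on its way.", 0.4),
    match_topic=lambda q: None,
    find_articles=lambda q: [],
    search_documents=lambda q: ["Shipping", "Returns", "Tracking", "Contact"],
)
print(reply.layer, reply.options)
```

Passing the lookups in as functions keeps the ordering logic independent of whichever NLU or search service sits behind each layer.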

With this type of layered fallback, you can deal with a larger scope of questions and meet customer expectations with minimal effort. This means you can “keep it small” and go live earlier, getting valuable feedback and insights sooner.

Providing a leave-a-message option or, even better, a human handover means that the user always has a way to get their question answered.

Being more perceptive instead of stubborn

The layered approach above widens the range of questions the chatbot can answer, but it won’t necessarily make the chatbot more perceptive.

It’s essential to present the knowledge articles and search results so that they are quick to scan and easy to ignore if not applicable. Also, give the user controls to provide feedback: an optional thumbs up or down lets users respond quickly.

This way, the chatbot can escalate the conversation to human takeover or leave a message, and it also allows you to capture labeled training data.
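As a rough sketch of how a thumbs up or down could serve both purposes, the snippet below stores each piece of feedback as a labeled example and signals whether to escalate. The `LabeledExample` structure, the `record_feedback` function, and the returned action names are hypothetical and only meant to illustrate the idea.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LabeledExample:
    utterance: str   # what the user originally asked
    suggestion: str  # the article or search result the bot offered
    is_match: bool   # True for thumbs up, False for thumbs down


def record_feedback(
    utterance: str,
    suggestion: str,
    thumbs_up: bool,
    dataset: List[LabeledExample],
) -> str:
    """Store the feedback as training data and return the next action."""
    dataset.append(LabeledExample(utterance, suggestion, thumbs_up))
    # A thumbs down means the suggestion did not help, so offer a way out.
    return "done" if thumbs_up else "escalate"


training_data: List[LabeledExample] = []
next_action = record_feedback(
    "Where is my parcel?", "Tracking your order", thumbs_up=False, dataset=training_data
)
print(next_action, len(training_data))
```

Here "escalate" stands for whichever exit is available, such as human takeover or leaving a message.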

In more general terms, the chatbot should always listen for user feedback like “That’s not what I meant” and allow the user to steer the chatbot back to the previous state. Chatbots should implement a set of “conversation control commands” (stop, cancel, back, skip, help) that allow users to navigate and discover the mazes of your bot.
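A minimal sketch of such control commands, assuming a simple linear flow of prompts, could look like this. The `GuidedFlow` class and its response texts are illustrative; a production bot would typically detect these commands through its NLU layer rather than by exact string matching.

```python
from typing import Dict, List


class GuidedFlow:
    """A linear series of prompts that honors stop, cancel, back, skip and help."""

    def __init__(self, prompts: List[str]) -> None:
        self.prompts = prompts
        self.index = 0
        self.answers: Dict[str, str] = {}
        self.active = True

    def prompt(self) -> str:
        return self.prompts[self.index]

    def _advance(self) -> str:
        self.index += 1
        if self.index >= len(self.prompts):
            self.active = False
            return "Thanks, that is all I need."
        return self.prompt()

    def handle(self, text: str) -> str:
        if not self.active:
            return "This flow has ended. What else can I help you with?"
        command = text.strip().lower()
        if command in ("stop", "cancel"):
            self.active = False
            return "Okay, I have stopped. What else can I help you with?"
        if command == "help":
            return "You can say stop, cancel, back, skip or help. " + self.prompt()
        if command == "back":
            self.index = max(0, self.index - 1)  # never go before the first prompt
            return self.prompt()
        if command == "skip":
            return self._advance()               # move on without storing an answer
        self.answers[self.prompt()] = text.strip()
        return self._advance()


flow = GuidedFlow(["What is your name?", "What is your email?"])
print(flow.handle("Ada"))   # stores the answer and asks the next question
print(flow.handle("back"))  # returns to the previous question
```

Checking for control commands before treating the input as an answer is what lets the user change course at any step.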

Most chatbots are goal-driven, meaning they detect the user’s intent and try to fulfill it. When the chatbot is too eager to commit, the user may feel out of control. Before committing to a goal, the chatbot should confirm the user’s intent: “I understand you want X, shall I action it?”
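The confirmation step could be sketched as follows. The phrase lists and the three outcome names are assumptions chosen for illustration; a real bot would more likely rely on trained yes/no intents than on fixed word lists.

```python
# Hypothetical phrase lists for a confirm-before-commit step.
AFFIRMATIVE = {"yes", "y", "sure", "ok", "okay", "please do"}
NEGATIVE = {"no", "n", "nope", "that's not what i meant"}


def confirmation_prompt(goal: str) -> str:
    return f"I understand you want to {goal}. Shall I go ahead?"


def resolve_confirmation(user_reply: str) -> str:
    """Decide what to do with the user's reply to the confirmation prompt."""
    normalized = user_reply.strip().lower().rstrip(".!")
    if normalized in AFFIRMATIVE:
        return "commit"   # the user confirmed the goal
    if normalized in NEGATIVE:
        return "abandon"  # drop the goal and let the user change course
    return "clarify"      # unclear reply: offer options instead of repeating


print(confirmation_prompt("cancel your subscription"))
print(resolve_confirmation("That's not what I meant."))
```

Returning a separate "clarify" outcome gives the bot a chance to offer alternatives rather than asking the same question again.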

Blueprint

Creating a chatbot that allows users to navigate the conversation easily, and that is perceptive to user feedback can be a challenge. Especially if you have to build it all from scratch.

This is why we have created a blueprint with auto-discovery, a basic level of conversational skills, and progressive fallback built right in. In the next blog article, we will expand on this. If you are eager to find out…

Sign up for a free account on our platform and get started using our BaseBot template and built-in skills.